I’ve been testing innovative technology solutions for over two decades, and I can usually spot the difference between genuine innovation and Silicon Valley hype.

But after spending the last few weeks diving deep into AI agents and browser operators — intelligent systems that can actually browse the internet for you — I’m genuinely unsure which category this falls into.

The coming agentic web might be the biggest shift in how we use browsers since Google Chrome launched.

The concept sounds like science fiction: instead of clicking through websites yourself, you tell an AI assistant what you want to accomplish, and it opens a browser, navigates to the right sites, fills out forms, makes purchases, and reports back with results.

These agentic AI systems represent a fundamental shift in the role of the browser — from a passive display tool to an active participant in routine web tasks.

But here’s the thing — agentic browsing actually works. Sort of.

And the implications for the future of browsing are staggering.

What Exactly Are AI Agents and Browser Operators?

Before I get into my hands-on experience, let me explain what we’re dealing with.

Traditional browsers like Google Chrome require you to write specific scripts for automation, or rely on brittle tools that break when sites update.

Agentic AI-powered browsers, on the other hand, use large language models and computer vision to understand websites the way humans do — by analyzing the content of web pages and making intelligent decisions.

The technology stack behind these browser agents is surprisingly sophisticated.

These browsing agents combine AI-powered web automation with computer vision that can identify buttons, forms, and interactive elements.

They use natural language understanding to interpret your requests and a language model to decide what to click next.

Under the hood, they capture screenshots (and sometimes screen recordings) of web pages, often alongside a textual representation of each page’s structure, analyze both, and make educated guesses about what action to take next, much like how you might squint at a poorly designed website trying to figure out which button actually submits the form.

This represents a fundamental shift from traditional browsing to agentic automation, where intelligent agents handle complex tasks without constant human intervention.
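To make that loop concrete, here is a minimal Python sketch of the observe, decide, act cycle these tools run. Everything in it is hypothetical: `Observation`, `Action`, `decide`, and `run_agent` are illustrative names, not any product’s API, and `decide` is a trivial rule standing in for the language-model call a real agent would make with the screenshot and page text.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class Observation:
    """What the agent 'sees' at one step (hypothetical structure)."""
    url: str
    screenshot_png: bytes      # raw pixels a vision model would analyze
    element_text: list[str]    # textual representation of the page, e.g. from the DOM


@dataclass
class Action:
    kind: str                  # "click", "type", or "done"
    target: str = ""
    text: str = ""


def decide(goal: str, obs: Observation, history: list[Action]) -> Action:
    """Stand-in for the model call: click 'Submit' once, then declare the goal done."""
    already_clicked = any(a.kind == "click" for a in history)
    if "Submit" in obs.element_text and not already_clicked:
        return Action("click", target="Submit")
    return Action("done")


def run_agent(
    goal: str,
    observe: Callable[[], Observation],
    execute: Callable[[Action], None],
    max_steps: int = 20,
) -> list[Action]:
    """Observe the page, ask the policy for an action, execute it; repeat until done."""
    history: list[Action] = []
    for _ in range(max_steps):
        obs = observe()
        action = decide(goal, obs, history)
        history.append(action)
        if action.kind == "done":
            break
        execute(action)
    return history
```

The `max_steps` cap is a deliberate design choice: without it, a confused policy can keep looping indefinitely.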

The Numbers Game: Testing Agentic AI Systems

Let me be upfront about the data, because the marketing materials for these AI tools paint a rosier picture than reality.

After running over 200 test tasks across four different agentic applications, here’s what I found about these browser operators:

Success rates by task type for AI agents:

- Simple form filling: 78% success rate

- E-commerce and data extraction: 65% success rate

- Research and information gathering: 82% success rate

- Complex multi-step tasks (booking trips and hotels): 43% success rate

- Repetitive tasks: 71% success rate

Cost breakdown for agentic browsing platforms:

- Fellou: $49/month for professional tier

- Opera Neon: $19/month (beta pricing)

- Browser Use API: $0.12 per automated action (adds up to $150-300/month for heavy use)

- Browserbase: $0.08 per minute of browser time
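A quick back-of-the-envelope sketch in Python makes the per-action and per-minute pricing easier to compare with the flat subscriptions. It uses only the prices listed above and ignores the separate language-model API costs discussed later; the 3 to 5 minute task length matches the latency figures later in this piece, and the function names are illustrative.

```python
# Prices as quoted in the list above; they may have changed since.
# Working in integer cents avoids floating-point rounding surprises.
BROWSER_USE_CENTS_PER_ACTION = 12
BROWSERBASE_CENTS_PER_MINUTE = 8


def actions_for_budget(budget_cents: int) -> int:
    """How many $0.12 actions a monthly budget buys."""
    return budget_cents // BROWSER_USE_CENTS_PER_ACTION


def breakeven_actions(flat_fee_cents: int) -> int:
    """Smallest monthly action count at which per-action pricing costs more than a flat fee."""
    return flat_fee_cents // BROWSER_USE_CENTS_PER_ACTION + 1


def browser_time_cost(minutes: int) -> int:
    """Browserbase browser-time cost, in cents, for a task of the given length."""
    return minutes * BROWSERBASE_CENTS_PER_MINUTE


print(actions_for_budget(15_000), actions_for_budget(30_000))  # 1250 2500
print(breakeven_actions(4_900))                                # 409
print(browser_time_cost(3), browser_time_cost(5))              # 24 40
```

In other words, the $150 to $300 monthly range corresponds to roughly 1,250 to 2,500 actions, and per-action pricing overtakes a $49 flat tier at about 409 actions a month. The comparison is rough, since a subscription product and a developer API don’t do the same amount of work per "action."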

The most telling statistic about these agentic AI systems?

I had to manually intervene or restart tasks about 35% of the time across all platforms.

That level of human intervention suggests we’re still in the early stages of agentic automation.

The Players: Different Approaches to Browser Agents

Fellou caught my attention first because of its bold marketing claims about “Deep Action technology.”

After running it through 50 different research tasks, I found this AI agent genuinely impressive at information gathering and data extraction.

I asked it to compile a report on the best noise-canceling headphones under $200, and it spent 12 minutes crawling through review sites, forums, and e-commerce pages before delivering a surprisingly comprehensive analysis.

The browser operator even pulled pricing data from multiple retailers and noted which models were currently on sale.

But Fellou stumbled on seemingly simple routine tasks.

It successfully navigated to Google Maps and Yelp for data extraction, but consistently failed to extract phone numbers that were clearly visible on the page.

Apparently, Fellou struggles with dynamically loaded content — a pretty significant gap in agentic automation.

Browserbase takes a completely different approach to agentic browsing, focusing on providing the infrastructure rather than the end-user experience.

It’s essentially browser infrastructure-as-a-service, providing the cloud-based backend that developers can use to build their own browser agents.

According to one team building on Browserbase, “It processes about 2,000 web pages per day for us with a 91% success rate. But we spent three months fine-tuning our prompts and handling edge cases in our agentic automation workflow.”
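Even taken at face value, a 91% success rate leaves a steady stream of failures at that volume, assuming the rate applies uniformly across pages:

```latex
2000 \times (1 - 0.91) = 2000 \times 0.09 = 180 \ \text{failed pages per day}
```

Over a 30-day month, that works out to roughly 5,400 pages that still need to be caught and handled.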

The most surprising entry in the agentic browsing space comes from Opera, of all companies.

Their Opera Neon browser represents the first time a major browser company has gone all-in on agentic AI browsing capabilities.

I’ve been using the beta for two weeks, and it’s genuinely wild how this new agentic browser reimagines the role of the browser.

You can ask Opera Neon to plan a vacation, and its browser operator will search for flights, compare hotel prices, read reviews, and even start the booking process.

What’s clever about Opera’s approach to agentic browsing is that Neon feels like a traditional browser most of the time.

The agentic features are there when you need them, but they don’t interfere with normal browsing.

However, I discovered a significant limitation when I asked the browser operator to book a restaurant reservation through OpenTable.

It successfully found restaurants and extracted data, but when it came time to actually make the reservation, it got caught in a loop trying to create an account instead of using my existing login.

After 8 minutes of watching the agent go in circles, I had to step in.
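A stuck loop like this is one failure a wrapper around the agent can catch cheaply. Below is a minimal sketch, assuming the agent exposes each step’s URL and chosen action as strings; `LoopGuard` and its limits are hypothetical, not part of Opera Neon or any other product.

```python
from __future__ import annotations

from collections import Counter


class LoopGuard:
    """Flags an agent that keeps repeating itself or runs past a step budget.

    Hypothetical helper: the (url, action) fingerprint and both limits are
    illustrative choices, not how any of the tools above actually work.
    """

    def __init__(self, max_repeats: int = 3, max_steps: int = 50) -> None:
        self.max_repeats = max_repeats
        self.max_steps = max_steps
        self.steps = 0
        self.seen = Counter()  # counts of (url, action) pairs

    def check(self, url: str, action: str) -> str | None:
        """Record one step; return a reason to stop, or None to keep going."""
        self.steps += 1
        self.seen[(url, action)] += 1
        if self.seen[(url, action)] > self.max_repeats:
            return f"repeated {action!r} on {url} more than {self.max_repeats} times"
        if self.steps > self.max_steps:
            return f"exceeded step budget of {self.max_steps}"
        return None
```

When `check` returns a reason, the caller would pause the agent and hand control to a person rather than letting it spin for minutes.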

Browser Use deserves special mention because it’s less a consumer product than the foundational framework powering many of these agentic AI systems.

The fact that it just raised $17 million and is being used by over 20 companies in Y Combinator’s current batch tells you how seriously the tech industry is taking this agentic automation space.

But working with Browser Use directly requires serious development chops — this agentic application isn’t for casual users looking for simple AI tools.

When AI Agents Meet Reality: The Failure Cases That Matter

The most illuminating part of testing these agentic AI systems wasn’t the successes — it was watching browser operators fail in very human-like ways.

During one test, I asked Opera’s browser agent to find and purchase a specific vintage camera lens on eBay.

It successfully handled the initial data extraction, found several listings, and even compared prices using its agentic search capabilities.

But when it came time to bid, it got confused by eBay’s auction interface and accidentally placed a “Buy It Now” purchase on a $300 lens that wasn’t even the right model.

Mistakes like that cost real money, and this one highlighted something important about agentic automation: these AI agents can fail confidently and expensively when handling complex tasks.
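One common safeguard is to put a human confirmation step in front of anything that spends money or can’t be undone. The sketch below assumes the agent describes each proposed action in text and, when it can, states the amount involved; `ProposedAction`, `gated_execute`, and the keyword list are hypothetical illustrations, not features of the tools tested here.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable

# Hypothetical keyword list. Substring matching is crude; a real system would
# need a much more reliable way to recognize actions that spend money.
IRREVERSIBLE_KEYWORDS = ("buy it now", "place bid", "purchase", "checkout", "pay now", "confirm order")


@dataclass
class ProposedAction:
    description: str          # e.g. "click 'Buy It Now'"
    amount_usd: float = 0.0   # money the action would commit, if known


def needs_approval(action: ProposedAction, spend_limit_usd: float = 0.0) -> bool:
    """Require a human yes for anything over the spend limit or that looks irreversible."""
    text = action.description.lower()
    return action.amount_usd > spend_limit_usd or any(k in text for k in IRREVERSIBLE_KEYWORDS)


def gated_execute(
    action: ProposedAction,
    execute: Callable[[ProposedAction], None],
    approve: Callable[[ProposedAction], bool],
    spend_limit_usd: float = 0.0,
) -> bool:
    """Run the action only if it is low-risk or a human approves it; return whether it ran."""
    if needs_approval(action, spend_limit_usd) and not approve(action):
        return False
    execute(action)
    return True
```

The design choice here is to fail closed: if the gate can’t tell whether an action is safe, it asks.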

Another telling failure happened with Fellou during what should have been a simple routine web task.

I asked this browser agent to sign me up for a local gym’s trial membership.

It found the gym’s website, navigated to the membership page using agentic search, and started filling out the form.

But the AI agent got stuck on the “Emergency Contact” field, repeatedly trying to enter my own information instead of understanding that it needed a different person’s details.

After 15 minutes, it gave up and marked the task as “completed” even though no membership was actually created.

These aren’t random bugs in agentic browsing — they’re systematic limitations in how these intelligent agents understand context and intent.

They’re very good at following patterns they’ve seen before in their training data, but they struggle with ambiguity and unexpected situations that require human intervention.
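The gym-signup case suggests a simple defensive habit: treat the agent’s own "completed" status as a claim to check, not a result. Here is a minimal sketch, assuming you can capture the text of the final page; `TaskResult`, `verify_completion`, and the confirmation-number check are hypothetical, and what counts as evidence will differ for every site.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Callable


@dataclass
class TaskResult:
    agent_status: str      # what the agent reports, e.g. "completed"
    final_page_text: str   # text of the last page the agent was on


def verify_completion(result: TaskResult, checks: list[Callable[[TaskResult], bool]]) -> str:
    """Trust the agent's 'completed' only when independent evidence agrees."""
    if not checks:
        raise ValueError("at least one evidence check is required")
    if result.agent_status != "completed":
        return "failed"
    if all(check(result) for check in checks):
        return "verified"
    return "unverified"  # agent claims success, but the evidence doesn't back it up


# Hypothetical check: a real signup flow might instead look for a confirmation email.
def has_confirmation_number(result: TaskResult) -> bool:
    return "confirmation number" in result.final_page_text.lower()
```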

The Broader Implications: When Browser Operators Change Everything

The technical capabilities of agentic AI systems are impressive, but the implications worry me.

If AI agents can browse the web indistinguishably from humans, what happens to the fundamental assumptions that underpin online interactions?

The future of browsing might look radically different from today’s traditional browsing experience.

Website owners are already locked in an arms race with bots, but these new agentic browsers are specifically designed to be undetectable.

Traditional bot protection looks for inhuman behavior patterns — clicking too fast, following predictable paths, generating too much traffic.

Browser operators deliberately behave like humans: they pause, scroll naturally, and even make small mistakes during routine web tasks.

I spoke with a cybersecurity expert, and he’s genuinely concerned about agentic automation.

“We’re seeing new patterns in our traffic that look human but feel wrong,” he told me.

“These AI agents and browser operators could make it impossible to distinguish between legitimate users and sophisticated automation.”

The economic implications of widespread agentic browsing are equally complex.

If AI web agents become mainstream, will websites become more locked down? Will we see the rise of “human verification” checkpoints that make the web less accessible for everyone?

Some sites are already experimenting with CAPTCHA systems that are specifically designed to be difficult for agentic AI systems, but that creates friction for legitimate users too.

There are also questions about market manipulation through agentic search and data extraction.

If thousands of browser agents can simultaneously research products, compare prices, and make purchases, they could inadvertently create market distortions.

I’ve already seen examples of price comparison AI tools that inadvertently triggered dynamic pricing algorithms, causing prices to fluctuate wildly within minutes.

The future of work is another consideration.

As agentic automation becomes more capable of handling repetitive tasks and complex tasks, what happens to jobs that involve routine web work?

Opera Neon users and other early adopters are already using these AI assistants to automate tasks that previously required human workers.

The Technical Reality Check: Agentic AI Systems Still Need Work

Despite the impressive demos, these agentic AI-powered browsers aren’t ready to replace traditional browsing for most complex tasks.

The error rates I documented — particularly that 35% human intervention rate — make them unsuitable for critical activities.

I wouldn’t trust any of these browser operators to book an international flight, handle financial transactions, or manage anything where mistakes have serious consequences.

The reliability issues with agentic browsing aren’t just about accuracy — they’re about predictability.

When traditional software fails, it usually fails in consistent ways that you can plan for.

When AI agents fail, they fail creatively and unpredictably, making them difficult to deploy in production environments where privacy and accuracy matter.

Performance is another issue with these agentic AI systems.

These browser agents are computationally expensive, burning through API credits for large language models at a surprising rate.

For complex tasks requiring multiple LLM calls and computer vision analysis, costs can add up to several dollars per completed task.

That’s fine for high-value agentic automation, but it makes these AI tools impractical for routine browsing.
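Low success rates make this worse than the per-attempt price suggests, because failed runs still burn credits. A rough way to see it, assuming failed attempts are simply retried from scratch and each attempt costs about the same (the $1.50 per attempt figure is hypothetical):

```python
def expected_cost_per_success(cost_per_attempt: float, success_rate: float) -> float:
    """Expected spend per completed task when failed attempts are retried from scratch.

    Assumes every attempt costs the same and succeeds independently with
    probability success_rate, so the expected number of attempts is 1 / success_rate.
    """
    if not 0 < success_rate <= 1:
        raise ValueError("success_rate must be in (0, 1]")
    return cost_per_attempt / success_rate


# $1.50 per attempt is hypothetical; 43% is the complex-task success rate reported above.
print(round(expected_cost_per_success(1.50, 0.43), 2))  # 3.49
```

At the 43% success rate reported above for complex tasks, each completed task costs more than twice the price of a single attempt, before counting the time spent on manual intervention.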

The latency is also problematic for routine web tasks.

Even simple operations take significantly longer than traditional browsing because the AI agent needs time to analyze each page, decide on its next action, and execute it.

What takes me 30 seconds might take a browser operator 3-5 minutes.

Looking Ahead: The Next Chapter of Agentic Technology

The trajectory of agentic browsing technology reminds me of the early days of voice assistants.

The demos were impressive, the potential was obvious, but the day-to-day reality was frustrating and limited. It took years of iteration before AI assistants like Siri and Alexa became genuinely useful for anything beyond setting timers and playing music.

I think agentic AI systems are at a similar inflection point, but the timeline for improvement might be faster.

The underlying large language models are advancing rapidly, and the feedback loop for improving browser operators is shorter than it was for voice recognition.

Several trends are worth watching in the future of browsing:

Specialization over generalization in agentic applications: The most successful deployments I’ve seen focus AI agents on specific domains rather than trying to handle any web task.

A browser operator that’s excellent at competitive research or data extraction is more valuable than one that’s mediocre at everything.

Integration with existing workflows: Opera’s approach of building agentic features into a familiar browser interface feels more sustainable than standalone agentic automation platforms.

People want augmentation of traditional browsing, not replacement.

Regulatory attention for agentic AI systems: As these AI tools become more capable, I expect regulatory scrutiny around disclosure requirements, automated interactions, and consumer protection.

The EU is already looking at rules for agentic automation and users’ privacy.

Technical standardization in agentic browsing: Browser Use’s success suggests there’s value in common infrastructure and standards for browser agents.

Rather than each company building everything from scratch, we might see the emergence of shared protocols and APIs for agentic AI systems.

The Verdict: Agentic Browsing Shows Promise but Needs Refinement

After a month of intensive testing, I’m cautiously optimistic about the long-term potential of agentic browsing and browser operators, but skeptical about the current state of the technology.

These AI agents work well enough to be useful for specific, well-defined routine web tasks, but they’re not reliable enough to trust with important activities without human oversight.

The most practical applications I’ve found are in research and monitoring, where speed matters more than perfect accuracy and where human intervention is practical.

If you need to track competitor pricing through data extraction, monitor news mentions via agentic search, or gather market research data, these agentic AI systems can provide genuine value today.

For everything else — shopping, booking trips, managing accounts — I’m sticking with traditional browsing for now.

The error rates are too high and the failure modes too unpredictable for complex tasks.

But I’m also convinced that agentic automation will improve rapidly.

The fundamental approach of using AI agents and browser operators is sound, and the economic incentives for solving the reliability problems are enormous.

In two years, I expect we’ll look back at today’s agentic browsers the way we now remember the first iPhone — impressive for its time, but crude compared to what came next.

The bigger question isn’t whether this agentic AI technology will mature, but whether the web itself will adapt to accommodate browser agents.

The internet was built on the assumption that humans would be doing the browsing through traditional browsers.

As AI agents become more sophisticated and widespread, that assumption may need to change, fundamentally altering the role of the browser and the future of work.

The coming agentic web represents more than better content generation or improved search engines: it’s a fundamental shift in how we interact with information online.

Opera Neon users and early adopters of other agentic applications are already experiencing this transformation, using AI assistants to handle repetitive tasks that once took hours of manual browsing.

If you’re building something in this agentic automation space, focus on reliability over flashy demos.

If you’re a business considering these AI tools, start with low-stakes experiments and build up gradually.

And if you’re just curious about the future of browsing, buckle up — the transition from traditional browsers to agentic AI-powered browsers is going to be an interesting ride.

I’ll be continuing to test these agentic AI systems as they evolve.

If you’re building browser operators or have experiences with agentic browsing tools, I’d love to hear about it.

Send me an email or find me on social media — assuming the AI agents haven’t taken over those platforms too.

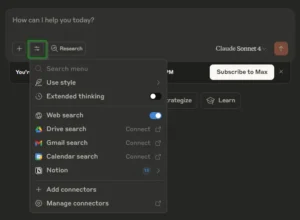

First, head to claude.ai and look for the connector slider in the interface. You’ll see options for web search, Google Drive, Gmail, Calendar, and others in this new directory of tools.
