Reading time: approx. 13 minutes

Look, I’m going to level with you here.

If you think you’re going to nail effective prompts on your first attempt, you’re probably the same person who thinks they can set up a smart home without reading any documentation.

Spoiler alert: it’s not happening.

After spending way too many hours wrestling with different models — ChatGPT, Claude, Perplexity, and every other large language model that’s crossed my laptop screen — I’ve learned something crucial:

Prompt engineering techniques are a lot like debugging code, except the compiler is a black box that sometimes decides your semicolon is actually a philosophical statement about existence.

The Uncomfortable Truth About Large Language Models

Here’s what nobody tells you when you first start playing with these AI tools: they’re incredibly powerful and frustratingly unpredictable at the same time.

It’s like having a sports car with a manual transmission labeled in a language you only sort of speak.

Sure, you can get it moving, but good luck writing prompts that consistently deliver your desired outcome.

The problem is that most people approach prompt engineering like they’re typing into Google.

They throw in some keywords, hit enter, and expect magic.

But AI prompt systems aren’t search engines – they’re more like that really smart friend who gives great advice but sometimes goes off on weird tangents about their childhood pets when you just wanted a straight answer to a specific question.

Most prompt engineers learn this the hard way: there’s no shortcut to good prompts.

You need to understand the iterative process of refining your approach, testing different prompt engineering techniques across various use cases, and gradually building up your prompt engineering skills through systematic experimentation to achieve better results.

Starting With Direct Instruction and Best Practices (Even Though You Want to Skip the Basics)

Before we dive into advanced prompting techniques, let’s talk fundamentals and best practices.

I know, I know – you want to jump straight to chain-of-thought prompting and zero-shot prompting.

But trust me, I’ve seen too many people try to run elaborate few-shot prompting chains before they can write a basic direct instruction that actually works.

Effective prompt engineering starts with understanding that your AI prompt needs three things: clarity about what you want, specificity about how you want it, and enough context that the AI doesn’t have to guess what you’re thinking.

Think of it like writing instructions for someone who’s incredibly capable but has never seen your particular use case before.

For example, instead of “write a product review,” try creating a specific prompt like: “write a 300-word review of the iPhone 15 Pro focusing on camera improvements, written for a tech-savvy audience who already owns the iPhone 14 Pro.”

See the difference?

One leaves the AI guessing; the other gives it specific information and a clear target for your desired outcome.
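If you want to make that habit stick, one option is a tiny helper that refuses to build a prompt until you have filled in the length, the focus, and the audience. This is only a sketch: `build_prompt` and its parameter names are made up for illustration and are not part of any library.

```python
def build_prompt(task: str, subject: str, word_count: int, focus: str, audience: str) -> str:
    """Assemble a specific prompt from the details a vague prompt leaves out.

    Hypothetical helper: every argument is required, so you cannot forget
    to say how long, about what, and for whom.
    """
    return (
        f"Write a {word_count}-word {task} of the {subject} "
        f"focusing on {focus}, written for {audience}."
    )


# Rebuild the example prompt from the text above.
prompt = build_prompt(
    task="review",
    subject="iPhone 15 Pro",
    word_count=300,
    focus="camera improvements",
    audience="a tech-savvy audience who already owns the iPhone 14 Pro",
)
print(prompt)
```

The point is not the string formatting. It is that the function signature acts as a checklist for the details the AI would otherwise have to guess.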

This is where prompt design really matters.

Well-crafted prompts aren’t just about being detailed – they’re about providing the right framework for large language models to understand your intent and deliver exactly what you need for specific tasks.

When working with different models, you’ll notice that each one responds slightly differently to the same prompt engineering techniques.

What works perfectly with one model might need adjustment for another, which is why understanding these best practices becomes so crucial for prompt engineers.

The Real Process: The Iterative Process of Optimizing Prompts

Now here’s where things get interesting, and where most people give up.

The secret to effective prompt engineering isn’t writing the perfect prompt on your first try – it’s getting comfortable with the iterative process of refinement and understanding that your first attempt will probably be mediocre, and that’s completely fine.

I treat prompt engineering like I treat reviewing gadgets: start with the basics, identify what’s not working, then systematically improve each piece through advanced prompting techniques.

It’s the same methodology I use when I’m testing a new phone or laptop, except instead of benchmark scores, I’m looking at whether my prompt engineering techniques actually produced better results for my specific use case.

Here’s my typical iterative process:

- I start with a simple, straightforward AI prompt and run it a few times across different models.

- Then I look at what went wrong:

  - Did it miss the tone I wanted?

  - Did it include information I didn’t ask for?

  - Did it completely misunderstand the assignment?

- Each failure point becomes a specific thing to fix in the next iteration, building toward more effective prompts through systematic improvement.
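Some of those "what went wrong" questions can be roughed out as automated checks. Here is a minimal sketch, assuming you can express a failure as a missing term, an unwanted phrase, or a word limit. The function name `find_failures` and its parameters are my own invention, and tone still needs a human read.

```python
def find_failures(
    output: str,
    required_terms: list[str],
    banned_terms: list[str],
    max_words: int,
) -> list[str]:
    """Return a list of concrete failure points found in one model output."""
    failures = []
    lowered = output.lower()

    # Did it skip something I asked for?
    for term in required_terms:
        if term.lower() not in lowered:
            failures.append(f"missing: {term}")

    # Did it include something I didn't ask for?
    for term in banned_terms:
        if term.lower() in lowered:
            failures.append(f"unwanted: {term}")

    # Did it ignore the length I wanted?
    if len(output.split()) > max_words:
        failures.append("too long")

    return failures


output = "The iPhone 15 Pro camera is amazing. In conclusion, buy it."
failures = find_failures(
    output,
    required_terms=["camera", "battery life"],
    banned_terms=["in conclusion"],
    max_words=200,
)
print(failures)  # ['missing: battery life', 'unwanted: in conclusion']
```

Each string in the returned list maps to one thing to change in the next version of the prompt.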

Let me give you a real example of this iterative process in action.

I was trying to get Claude to help me write product comparison charts — a specific use case that required careful prompt design.

My first prompt was something like “compare these two phones.”

The result was… technically correct but completely useless for my actual needs.

It gave me a generic comparison that could have been written by someone who’d never used either device – definitely not the effective prompt engineering I was aiming for.

So I began the iterative process of refinement, following best practices for providing examples and specific information.

Version two used more advanced prompting techniques: “Create a detailed comparison chart between the iPhone 15 Pro and Samsung Galaxy S24 Ultra, focusing on features that matter to power users: camera quality, performance, battery life, and ecosystem integration. Format as a table with clear pros and cons for each category.”

Better results, but still not quite right.

The tone was too formal, and it wasn’t capturing the kind of practical insights I actually put in my reviews.

Version three added more specific information and context about my desired outcome: “Write this comparison from the perspective of a tech reviewer who has used both devices extensively and is addressing an audience of enthusiasts who want honest, practical advice.”

That’s when my prompt engineering techniques finally clicked with this particular use case.

The AI started producing content that actually sounded like something I would write, with the kind of nuanced takes that come from real-world usage rather than spec sheet comparisons.

This is what effective prompt engineering looks like in practice – not perfection on the first try, but systematic improvement through the iterative process until you achieve your desired outcome.

Getting Systematic About Prompt Engineering Skills and Best Practices

Once you accept that the iterative process is inevitable, you might as well get good at optimizing prompts systematically.

I’ve started keeping a simple document where I track what I change and why in my prompt design — essentially creating my own prompt templates for different use cases.

It’s not fancy – just a running log of “tried this specific prompt, got that result, changing X because Y.”

This documentation habit has saved me countless hours and dramatically improved my prompt engineering skills across different models.

Instead of starting from scratch every time I need a similar AI prompt for specific tasks, I can look back at what worked and what didn’t.

It’s like keeping notes on which camera settings work best in different lighting conditions – eventually, you build up a library of effective prompting techniques that actually deliver better results.
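My log is just a text document, but if you would rather keep it in code, a sketch along these lines would do the same job. The class and field names (`PromptLog`, `PromptLogEntry`, `change`, `reason`) are hypothetical. They simply mirror the "tried this, got that, changing X because Y" format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class PromptLogEntry:
    version: int
    prompt: str   # tried this specific prompt
    result: str   # got that result
    change: str   # changing X ...
    reason: str   # ... because Y


@dataclass
class PromptLog:
    entries: list[PromptLogEntry] = field(default_factory=list)

    def add(self, prompt: str, result: str, change: str, reason: str) -> PromptLogEntry:
        """Append a new entry, numbering versions from 1."""
        entry = PromptLogEntry(len(self.entries) + 1, prompt, result, change, reason)
        self.entries.append(entry)
        return entry

    def to_json(self) -> str:
        """Serialize the whole log so it can be saved next to your notes."""
        return json.dumps([asdict(e) for e in self.entries], indent=2)


log = PromptLog()
log.add(
    prompt="compare these two phones",
    result="generic comparison, no practical insight",
    change="name both phones and the categories to compare",
    reason="the model had to guess what mattered",
)
log.add(
    prompt="Create a detailed comparison chart between the iPhone 15 Pro and Samsung Galaxy S24 Ultra...",
    result="better structure, tone too formal",
    change="add reviewer perspective and audience",
    reason="output did not sound like my reviews",
)
print(log.to_json())
```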

The key is being systematic about testing your prompt engineering techniques.

Don’t just run your AI prompt once and call it good.

Try it multiple times, with different inputs if relevant, and test it across different models when possible.

Large language models can have surprisingly variable outputs, and what works great once might completely fail the next time if you haven’t nailed down the right prompt structure through proper prompt design.
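To put a number on that variability, you can run the same prompt several times and count how often the output passes a check. The sketch below assumes a `model` callable that takes a prompt and returns text. `fake_model` is a canned stand-in so the example runs without any API, and `pass_rate` is a hypothetical helper, not something from a real SDK.

```python
import itertools
from typing import Callable


def pass_rate(
    model: Callable[[str], str],
    prompt: str,
    check: Callable[[str], bool],
    runs: int = 5,
) -> float:
    """Run the same prompt `runs` times and return the fraction of outputs that pass."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    passed = sum(1 for _ in range(runs) if check(model(prompt)))
    return passed / runs


# Stand-in for a real model: cycles through canned outputs to mimic variability.
_canned = itertools.cycle([
    "| Category | iPhone 15 Pro | Galaxy S24 Ultra |",
    "Sure! Here is a comparison of the two phones in paragraph form.",
    "| Camera | ... | ... |",
])


def fake_model(prompt: str) -> str:
    return next(_canned)


# Check: did the model actually return a table, as the prompt asked?
rate = pass_rate(
    fake_model,
    "Compare the two phones. Format as a table.",
    check=lambda out: out.startswith("|"),
    runs=6,
)
print(f"pass rate: {rate:.2f}")
```

Swap `fake_model` for a function that calls whichever model you are testing, and the same harness works across different models.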

Many prompt engineers make the mistake of not testing their work thoroughly enough across various use cases.

They create what seems like a good prompt, get one decent result, and assume they’ve mastered effective prompt engineering.

But real prompt engineering skills come from understanding how your prompts perform across different scenarios, edge cases, and models to consistently achieve your desired outcome.

Advanced Prompting Techniques and Best Practices (For When You’re Ready)

Once you’ve got the basics of effective prompt engineering down, there are some more sophisticated approaches worth exploring.

Chain-of-thought prompting

Chain-of-thought prompting is particularly useful for complex tasks – basically, you ask the AI to show its work, step by step, instead of just giving you the final answer.

For instance, instead of asking for a final verdict on whether someone should buy a particular gadget, I might use chain-of-thought prompting to ask the AI to first analyze the user’s stated needs, then evaluate how well the product meets those needs, and finally make a recommendation based on that analysis.

The intermediate steps often reveal whether large language models are actually reasoning through the problem or just pattern-matching from their training data.
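Here is roughly how that gadget-recommendation prompt could be assembled. Treat it as a sketch: `chain_of_thought_prompt` is a hypothetical helper, and the exact wording of the steps is mine.

```python
def chain_of_thought_prompt(question: str, steps: list[str]) -> str:
    """Wrap a question in explicit reasoning steps the model should show in order."""
    numbered = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{question}\n\n"
        "Work through the following steps in order, showing your reasoning "
        "for each one before giving a final answer:\n"
        f"{numbered}\n\n"
        "Finish with a line that starts with 'Recommendation:'."
    )


prompt = chain_of_thought_prompt(
    "Should this user buy the gadget described below?",
    [
        "Analyze the user's stated needs.",
        "Evaluate how well the product meets those needs.",
        "Make a recommendation based on that analysis.",
    ],
)
print(prompt)
```

Asking for a fixed final line such as `Recommendation:` makes the verdict easy to find after the reasoning.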

Zero-shot prompting

Zero-shot prompting is another powerful technique where you give the AI a task without including any worked examples in the prompt, but provide enough context and structure that it can figure out your desired outcome.

This is different from few-shot prompting, where you include a handful of examples of the desired output format in the prompt, a best practice that works particularly well when you need consistent results across different models.
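The difference is easiest to see side by side. In this sketch the only thing that changes between the two prompts is whether example input/output pairs are included. The `Input:` / `Output:` layout and the function names are my own convention, not a required format.

```python
def zero_shot(instruction: str, item: str) -> str:
    """Instruction plus the new input, with no examples."""
    return f"{instruction}\n\nInput: {item}\nOutput:"


def few_shot(instruction: str, examples: list[tuple[str, str]], item: str) -> str:
    """Same instruction, but with worked examples before the new input."""
    shots = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {item}\nOutput:"


instruction = "Summarize each phone spec line as a one-sentence takeaway for buyers."
examples = [
    ("5000 mAh battery", "Comfortably lasts a full day of heavy use."),
    ("120 Hz display", "Scrolling and animations feel noticeably smoother."),
]

zero = zero_shot(instruction, "48 MP main camera")
few = few_shot(instruction, examples, "48 MP main camera")
print(zero)
print("---")
print(few)
```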

Self-improving prompting

I’ve also started experimenting with prompts that include self-correction mechanisms as part of my advanced prompting techniques.

Something like “After writing your initial response, review it for accuracy and practical usefulness, then provide a revised version if needed.”

It doesn’t always work across all use cases, but when it does, the better results from this prompt engineering approach are noticeable.
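In code, the single-prompt version is just an appended instruction. The sketch below also shows a two-call variation (draft first, then a separate review request), which is my own extension rather than what I described above. `model` is assumed to be any callable that maps a prompt to text, and `stub_model` is a stand-in so the example runs offline.

```python
from typing import Callable

REVIEW_INSTRUCTION = (
    "After writing your initial response, review it for accuracy and practical "
    "usefulness, then provide a revised version if needed."
)


def with_self_review(prompt: str) -> str:
    """Single-call version: append the review instruction to the prompt."""
    return f"{prompt}\n\n{REVIEW_INSTRUCTION}"


def draft_then_revise(model: Callable[[str], str], prompt: str) -> str:
    """Two-call variation: get a draft, then ask the model to review that draft."""
    draft = model(prompt)
    review_prompt = (
        f"Here is a draft response to the request '{prompt}':\n\n{draft}\n\n"
        "Review it for accuracy and practical usefulness, "
        "then provide a revised version if needed."
    )
    return model(review_prompt)


# Stand-in model that records what it was asked.
calls: list[str] = []


def stub_model(prompt: str) -> str:
    calls.append(prompt)
    return f"response #{len(calls)}"


single = with_self_review("Compare these two phones.")
final = draft_then_revise(stub_model, "Compare these two phones.")
print(final)  # response #2
```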

The beauty of these advanced prompting techniques is that they can be combined for specific tasks.

You might use chain-of-thought prompting within a few-shot prompting framework, or incorporate direct instruction elements into your zero-shot prompting approach.

The key is understanding how these different prompt engineering techniques work together across different models to create more effective prompts that consistently deliver your desired outcome.

The Game-Changer: Self-Improving AI Systems with Claude Connectors or ChatGPT Connectors

But here’s where things get really interesting, and where the future of prompt engineering is heading.

I’ve been experimenting with Claude’s new connectors, and they’re not just another automation tool – they’re creating something I can only describe as self-improving AI systems that get smarter every time you use them for specific tasks.

Think about this: what if your prompt engineering techniques could automatically improve themselves based on what works and what doesn’t for a specific use case?

What if your AI prompt could learn from each interaction and update its own instructions to deliver better results next time?

That’s exactly what’s happening with these new connectors when you apply best practices for prompt design.

Instead of static prompt templates that you have to manually iterate on, you can create AI systems that perform the iterative process automatically, building more effective prompts through their own experience with specific tasks.

Here’s how I’ve been setting this up:

Instead of hardcoding my prompt engineering techniques directly into Claude projects, I store them in a Notion document that Claude or ChatGPT can access and modify through their connectors.

Then I add this crucial instruction to my AI prompt:

“Important: Once the session is over, please work with the user to update these instructions based on things that were learned during the recent session.”

The result?

An AI system that doesn’t just follow your prompt design – it actively improves it for your specific use case.

After each interaction, it suggests refinements to its own prompt engineering techniques based on what delivered better results and what could be improved.

It’s like having a prompt engineer that never stops learning and optimizing for your desired outcome.
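The connector setup itself happens in the Claude or ChatGPT interface, so there is no code to show for that part. The underlying loop can still be sketched with a local text file standing in for the Notion document. Everything here (`load_instructions`, `apply_approved_update`, the file name) is hypothetical and is not a connector or Notion API.

```python
import tempfile
from pathlib import Path

UPDATE_LINE = (
    "Important: Once the session is over, please work with the user to update "
    "these instructions based on things that were learned during the recent session."
)


def load_instructions(path: Path) -> str:
    """Read the stored instructions and make sure the update line is included."""
    text = path.read_text(encoding="utf-8")
    if UPDATE_LINE not in text:
        text = f"{text.rstrip()}\n\n{UPDATE_LINE}\n"
    return text


def apply_approved_update(path: Path, lesson: str, approved: bool) -> bool:
    """Append a lesson to the stored instructions, but only with user approval."""
    if not approved:
        return False
    with path.open("a", encoding="utf-8") as f:
        f.write(f"\n- Learned: {lesson}\n")
    return True


# Local file as a stand-in for the shared document.
with tempfile.TemporaryDirectory() as tmp:
    doc = Path(tmp) / "instructions.md"
    doc.write_text("Draft social posts from my research notes.\n", encoding="utf-8")

    session_prompt = load_instructions(doc)  # what the session starts from
    saved = apply_approved_update(doc, "Keep posts under 200 words.", approved=True)
    updated = doc.read_text(encoding="utf-8")  # what the next session will see

print(updated)
```

The `approved` flag mirrors the "work with the user" wording in the instruction: nothing gets written back unless you sign off on it.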

This isn’t just theoretical.

I’ve watched my research-to-social-media workflow AI prompt evolve over dozens of iterations, automatically incorporating more effective prompting techniques, refining its understanding of my style across different models, and developing more sophisticated prompt engineering skills than I could have programmed manually.

The three-phase approach that works consistently follows these best practices:

- Process Documentation – Document exactly what you want the AI to do for specific tasks, but store it in a connected document (Notion, Google Docs) rather than static instructions

- Creating Instructions – Convert your process into step-by-step prompt engineering techniques that keep you in the loop for approval, providing examples where needed

- Iterative Improvement – Let the AI automatically refine its own prompt design based on real-world performance and better results

What makes this different from traditional prompt engineering is that large language models become active participants in optimizing prompts rather than just following them.

The model itself isn’t being retrained between sessions; what improves is the instructions document it helps rewrite, which yields more effective prompts over time without requiring constant manual intervention from prompt engineers.

Building Your Prompt Engineering Skills: When Good Enough Actually Delivers Your Desired Outcome

Here’s something that took me way too long to learn about effective prompt engineering: you don’t need to optimize every AI prompt to perfection.

Sometimes good prompts are good enough, especially for one-off specific tasks or quick content generation use cases.

The iterative process can become addictive.

I’ve definitely fallen into the trap of spending an hour fine-tuning prompt engineering techniques for a task that would have taken ten minutes to complete manually.

It’s like spending all day optimizing your desktop setup instead of actually getting work done – satisfying in the moment but ultimately counterproductive to developing real prompt engineering skills.

The key is knowing when to stop optimizing prompts based on your specific use case.

If your current prompt design is producing consistently useful results and you’re not hitting major failure modes across different models, it’s probably time to move on.

Save the perfectionism for AI prompts you’ll use repeatedly or for particularly important specific tasks where effective prompt engineering really matters for better results.

This is where experienced prompt engineers separate themselves from beginners.

They understand that the goal isn’t perfect prompts – it’s effective prompts that reliably deliver the specific information or desired outcome you need.

Sometimes a simple direct instruction works better than elaborate advanced prompting techniques, and that’s perfectly fine when following best practices.

The Bigger Picture of Prompt Engineering Techniques and Best Practices

What’s really interesting about all this is how much prompt engineering resembles other kinds of technical problem-solving.

The iterative process, the systematic testing, the documentation of what works across different use cases – it’s all stuff we already do when we’re troubleshooting network issues or optimizing website performance.

The difference is that with traditional debugging, you eventually figure out the underlying system well enough to predict how it will behave.

With large language models, that level of predictability might never come.

The models are too complex, and they keep changing as companies update and improve them.

But that’s not necessarily a bad thing for prompt engineers.

It just means we need to get comfortable with a different kind of workflow – one that’s more experimental and adaptive than the linear processes we’re used to in traditional software development.

The iterative process becomes even more important when the underlying system is constantly evolving and you’re working across different models.

This is why building solid prompt engineering skills matters more than memorizing specific prompt templates.

The fundamentals of effective prompt engineering – clarity, specificity, systematic testing, and iterative improvement – these will remain relevant even as large language models themselves continue to evolve and new use cases emerge.

What’s Next for Prompt Engineers and Best Practices

The tools for prompt engineering are getting better rapidly.

We’re starting to see platforms that can automatically test prompt variations and track performance metrics for different prompt engineering techniques across various use cases.

Some can even suggest improvements based on common failure patterns in prompt design when working with specific tasks.

But the fundamental skill – being able to think clearly about your desired outcome and then systematically work toward getting it through effective prompting techniques – that’s not going away.

If anything, it’s becoming more important as these AI tools become more powerful and more widely used across different models.

The companies building large language models are getting better at making them more intuitive and user-friendly for natural language interaction.

But the models are also becoming more capable, which means the complexity ceiling for advanced prompting techniques keeps rising.

Learning the iterative process effectively isn’t just about getting better results today – it’s about building the prompt engineering skills you’ll need to work with whatever comes next.

Whether you’re working with zero-shot prompting, few-shot prompting, chain-of-thought prompting, or developing entirely new prompt engineering techniques, the core best practices remain the same:

Start with clear direct instruction, test systematically across specific tasks, iterate based on results, and always focus on creating effective prompts that deliver your desired outcome rather than perfect ones.

And trust me, based on the trajectory we’re on, whatever comes next in the world of large language models and prompt engineering is going to be pretty wild.

The prompt engineers who master the iterative process now, understand how to work across different models, and follow these best practices will be the ones best positioned to take advantage of whatever advanced prompting techniques emerge next.

Ready to Build Your Own Self-Improving AI System?

If you’re tired of manually iterating on the same prompt engineering techniques over and over across different use cases, it’s time to let your AI do the heavy lifting.

Here’s your challenge:

Pick one repetitive specific task you do weekly – content creation, research synthesis, email management, whatever – and build a self-improving AI system using Claude’s or ChatGPT’s connectors following these best practices.

Start simple: document your process, convert it to instructions, providing examples where helpful, then add that magic line about updating based on learned experience.

Within a month, you’ll have an AI assistant that’s genuinely gotten better at understanding exactly what you need for your desired outcome.

Set up your first self-improving AI system in Claude →

Don’t just optimize prompts manually when you could be building AI that optimizes itself.

The prompt engineers who figure this out first are going to have a massive advantage over everyone still stuck in the manual iteration cycle across different models.

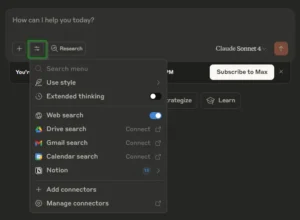

First, head to claude.ai and look for the connector slider in the interface. You’ll see options for web search, Google Drive, Gmail, Calendar, and others in this new directory of tools.
